Open source: the future of AI?

Over the past few weeks, we’ve made a big leap at Delphi: hosting our own LLMs. Compared with relying solely on commercial models such as OpenAI’s GPT-4 and Anthropic’s Claude, self-hosting gives us more flexibility and more control over customer data.

One of the most common questions we get is whether Delphi can work without relying on companies like OpenAI. As of today, the answer is “yes.”

(As a side note, OpenAI has a strong data-use policy that includes not training on customer data and retaining it for only 30 days for abuse monitoring. We’ve found that this works well for many companies, but others are uncomfortable connecting to a third party like OpenAI at all.)

Now that we’ve spent some time working with open-source models, we want to share our perspective on them. A few important questions:

Are open-source models the future of AI? Or will everything just flow to companies like OpenAI (or Google) that have the biggest, most cutting-edge models?

How well do they work? And are they getting better?

How can I try out open-source LLMs for myself?

Are open-source models the future?

We think so. Especially at the enterprise level, companies want control over their own data. We’ll likely see entire models sitting in enterprises’ own clouds, trained on proprietary data. If these models get good enough to rival GPT-4 (or GPT-5), there’s a good chance they’ll be widely adopted. And if they can be trained and run easily and cheaply enough, smaller companies may adopt them too, not just large enterprises.

At the same time, closed-model providers like OpenAI will always have an advantage: they can simply spend more and train larger models on more data than anyone else. GPT-4 reportedly cost over $100 million to train, and its parameter count, which OpenAI has not disclosed, is rumored to be around 1 trillion. The next generation of models will likely be even larger.

To some extent, open-source models will always be playing catch-up. But the trick is that they don’t need to perform as well as closed models on everything. Instead, they can be trained or fine-tuned on specific tasks.

How well do they work?

At Delphi, that’s exactly what we’re doing: training the best open models on data-focused tasks such as generating queries, interpreting data, and building charts.

We’ve been pleasantly surprised by the results. Using state-of-the-art models like Falcon-40B, we’ve seen results similar to ChatGPT (gpt-3.5-turbo) on these tasks. With more training, we believe we can soon match or beat GPT-4 on them, with faster response times and lower costs.

That said, open-source models still lag behind the big closed models in general. Where closed models like GPT-4 really shine is general-purpose reasoning. Even the best open models still feel like they’re just completing text with the most likely next word. But ask GPT-4 a complex question that requires a mental model of the world, and you can almost feel it reasoning its way through the problem.

How can I try out open-source LLMs?

If you want to try open-source LLMs, here are some options:

On HuggingFace, many open-source models can be tried for free through the “Hosted Inference API.” You can call the API directly, or use the interface HuggingFace provides on each model’s page to try them on your own prompts. Give it a try with Falcon-7B!
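For the API route, here is a minimal Python sketch that uses only the standard library. It assumes the endpoint format the Hosted Inference API used at the time of writing (`https://api-inference.huggingface.co/models/<model_id>`), the `tiiuae/falcon-7b-instruct` model id, and an access token stored in a hypothetical `HF_TOKEN` environment variable. HuggingFace’s inference offerings may have changed since, so treat the URL as an assumption and check the current documentation.

```python
import json
import os
import urllib.request

# Assumption: endpoint format of the Hosted Inference API at the time of writing.
API_URL_TEMPLATE = "https://api-inference.huggingface.co/models/{model_id}"
# Assumption: the instruct variant of Falcon-7B; other text-generation model ids work the same way.
DEFAULT_MODEL_ID = "tiiuae/falcon-7b-instruct"


def build_request(prompt: str, token: str, model_id: str = DEFAULT_MODEL_ID,
                  max_new_tokens: int = 100) -> urllib.request.Request:
    """Build (but do not send) a POST request for a text-generation model."""
    payload = {
        "inputs": prompt,
        "parameters": {"max_new_tokens": max_new_tokens},
    }
    return urllib.request.Request(
        API_URL_TEMPLATE.format(model_id=model_id),
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def generate(prompt: str, token: str, model_id: str = DEFAULT_MODEL_ID) -> str:
    """Send the request and return the generated text (no retry or error handling)."""
    req = build_request(prompt, token, model_id)
    with urllib.request.urlopen(req, timeout=60) as resp:
        result = json.loads(resp.read().decode("utf-8"))
    # Text-generation models typically respond with [{"generated_text": "..."}].
    return result[0]["generated_text"]


if __name__ == "__main__":
    # HF_TOKEN is a hypothetical environment variable holding your access token.
    token = os.environ.get("HF_TOKEN")
    if token:
        print(generate("Write a SQL query that counts orders per month.", token))
```

Keeping `build_request` separate from `generate` makes it easy to inspect the exact payload and headers without making a network call.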

To try (smaller) open models at home, download GPT4All. It makes it really easy to get some of the best open-source models running locally on your Mac or PC. I’ve had a lot of fun trying different ones and seeing what they can do.
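Why “smaller”? A rough back-of-the-envelope check helps: the memory needed just to hold a model’s weights is about its parameter count times the bytes stored per parameter. The sketch below uses nominal parameter counts (7 billion and 40 billion) and ignores activations, caching, and runtime overhead, so real memory use is higher. It is only a rule of thumb, not a measurement of GPT4All or any specific model.

```python
# Rule of thumb (assumption): weight memory ~= parameter count x bytes per parameter.
# Ignores activations, caches, and runtime overhead, so actual usage is higher.
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Approximate gigabytes (1e9 bytes) needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


# Nominal parameter counts, used for illustration only.
for name, params in [("Falcon-7B", 7e9), ("Falcon-40B", 40e9)]:
    for precision in ("fp16", "int4"):
        print(f"{name} @ {precision}: ~{weight_memory_gb(params, precision):.1f} GB")
```

By this estimate a 7B model at 16-bit precision needs roughly 14 GB for its weights, and about 3.5 GB when quantized to 4 bits, which is why smaller, quantized models are the practical choice on a typical laptop.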

For training and deploying models to production, there are almost too many options to list. HuggingFace offers Inference Endpoints. Alternatively, the big cloud providers have options like AWS SageMaker and Google Vertex AI, or you can simply spin up a VM with a bunch of GPUs.

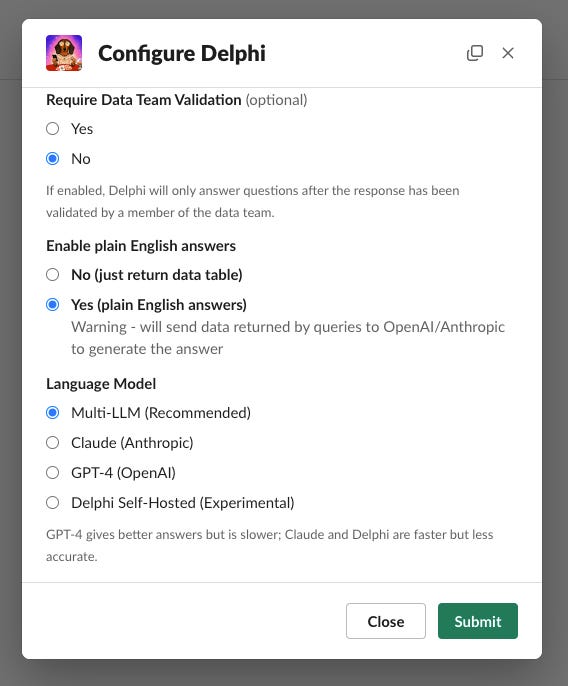

Or, if you use a semantic layer like Cube, dbt, or Looker, and want to try an open-source model on your own data, sign up for Delphi! Delphi connects AI to your company’s data to make it really easy to query and explore. At setup, you can choose “Delphi (Experimental)” as the language model and then see what it can do.

Let us know your thoughts, and feel free to get in touch at founders@delphihq.com if you want to try Delphi!