Delphi is Multi-LLM!

The new enterprise architecture buzzword

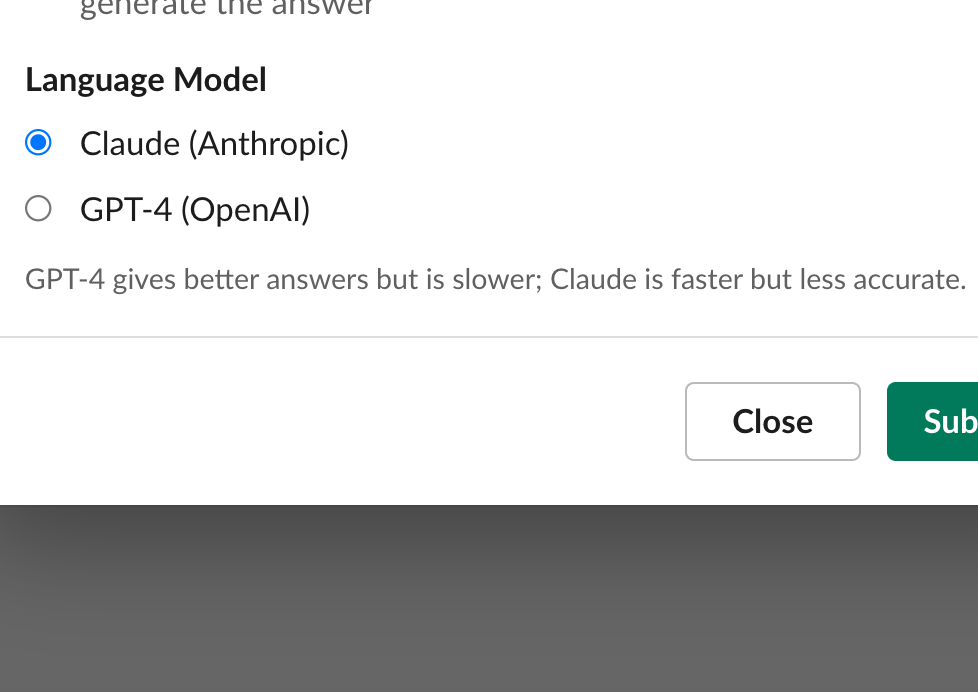

You can now choose between Anthropic’s Claude model and OpenAI’s GPT-4 when configuring Delphi.

This is just the first step on our journey towards offering more choice in LLMs to our customers. We have recently been accepted onto the Google Cloud Platform AI Startup program and will be looking to include some of GCP’s LLMs shortly.

Different LLMs and their APIs offer different trade-offs. We’ve found that Claude answers much faster than GPT-4 but is slightly less accurate. Offering both lets data teams customise Delphi for the needs of their business.

In fact, our users have enjoyed Claude’s speed so much that we’ve made it the default choice on setup.
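
To make the speed-versus-accuracy choice concrete, here is a minimal sketch in Python of how it could be modelled. It is illustrative only: `Provider`, `TRADEOFFS` and `choose_provider` are hypothetical names invented for this example, not Delphi’s actual configuration API, and the trade-offs are the qualitative ones described above rather than benchmark figures.

```python
from dataclasses import dataclass
from enum import Enum


class Provider(Enum):
    """The two model choices mentioned in this post (hypothetical identifiers)."""
    CLAUDE = "claude"
    GPT_4 = "gpt-4"


@dataclass(frozen=True)
class Tradeoff:
    relative_speed: str
    relative_accuracy: str


# Qualitative trade-offs as described in the post, not measured numbers.
TRADEOFFS = {
    Provider.CLAUDE: Tradeoff(relative_speed="faster", relative_accuracy="slightly lower"),
    Provider.GPT_4: Tradeoff(relative_speed="slower", relative_accuracy="slightly higher"),
}

# Claude is the default choice on setup.
DEFAULT_PROVIDER = Provider.CLAUDE


def choose_provider(prefer_accuracy: bool = False) -> Provider:
    """Return GPT-4 when accuracy matters most, otherwise the default."""
    return Provider.GPT_4 if prefer_accuracy else DEFAULT_PROVIDER
```

A team that cares most about response time would keep the default, while one that needs the most accurate answers would call `choose_provider(prefer_accuracy=True)`.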

We keep a very close eye on the open source situation, too. We know that, for many companies, it would be better if we hosted the LLM on our infrastructure (or even on theirs) to guarantee a higher level of control. It would also allow us to offer sub-second latency, which is not possible today with Claude or GPT-4.

The emerging field of LLM-Ops will also give us further ways to combine multiple models in a nuanced way, perhaps even in the same workflow.

If you’re interested in trying out Delphi, go to delphihq.com and click “Get Started”! 🦾